AI video generation has a new king: less than 48 hours after launch, netizens are already flooding X with "blockbusters"

The AI video generation race is heating up!

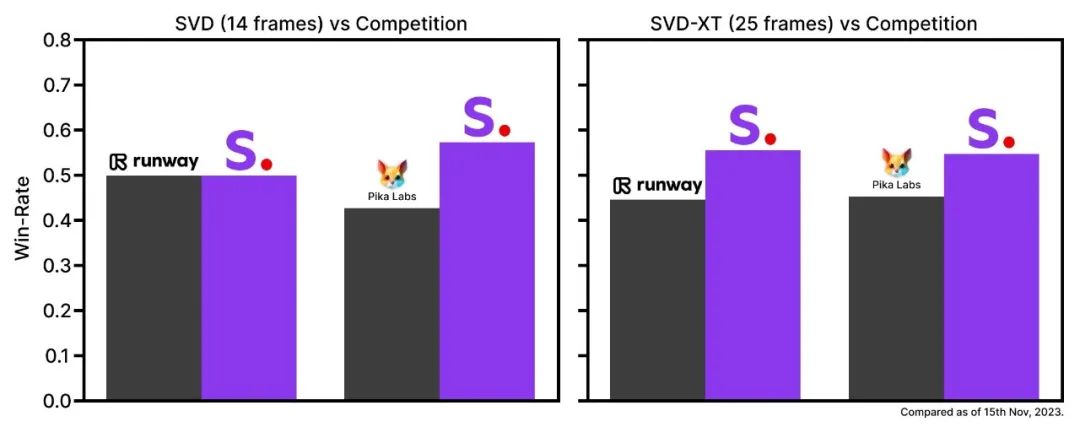

On Tuesday, Stability AI quietly released its first open-source AI video model, Stable Video Diffusion (hereinafter SVD). It also published test data stating that SVD outperforms leading closed-source commercial models such as RunwayML and Pika Labs.

You may not know Stability AI, but you have probably heard of the model it developed: the popular, likewise open-source text-to-image model Stable Diffusion (hereinafter SD). For many people, SD was their first contact with AI painting, and even with the AIGC field as a whole.

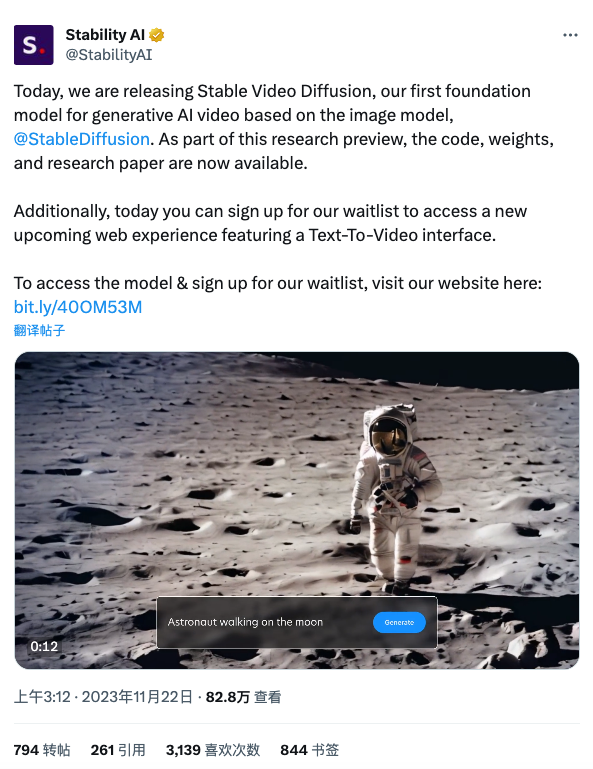

With this open-source AI video model, Stability AI now has a set of open-source large models spanning images, language, audio and other fields, and many users responded with "finally, we've been waiting for you". Less than 48 hours after the SVD launch was announced, the post on X had passed 800,000 views.

With so much buzz, how does SVD actually perform?

Like the mainstream AI video generation models on the market, SVD lets users generate videos from text or image input.

How do the results look? Start with the official demos.

Text to video:

Image to video:

For the "Image to Video" function, Stable Video Diffusion provides two versions of the model: SVD, which generates 14 frames, and SVD-XT, which generates 25 frames. Both support a custom frame rate between 3 and 30 frames per second.

Put simply, the higher the frame rate, the smoother the video.
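Because each model outputs a fixed number of frames, the chosen frame rate also determines how long the clip lasts: duration equals frame count divided by frame rate. The sketch below only illustrates that arithmetic with the figures quoted above; the function and constant names are hypothetical and are not part of any SVD API.

```python
# Frame counts and the 3-30 fps range are the figures quoted in this article.
# The names below are hypothetical helpers, not part of any SVD API.
FRAME_COUNTS = {"SVD": 14, "SVD-XT": 25}
MIN_FPS, MAX_FPS = 3, 30


def clip_duration_seconds(model: str, fps: int) -> float:
    """Return clip length in seconds: fixed frame count divided by frame rate."""
    if model not in FRAME_COUNTS:
        raise KeyError(f"unknown model: {model}")
    if not MIN_FPS <= fps <= MAX_FPS:
        raise ValueError(f"fps must be between {MIN_FPS} and {MAX_FPS}")
    return FRAME_COUNTS[model] / fps


if __name__ == "__main__":
    # Compare the slowest, a middle, and the fastest frame rate for each model.
    for model in FRAME_COUNTS:
        for fps in (MIN_FPS, 10, MAX_FPS):
            seconds = clip_duration_seconds(model, fps)
            print(f"{model} at {fps} fps lasts {seconds:.2f} s")
```

The trade-off is that a higher frame rate gives smoother motion but a shorter clip, since the number of frames does not change.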

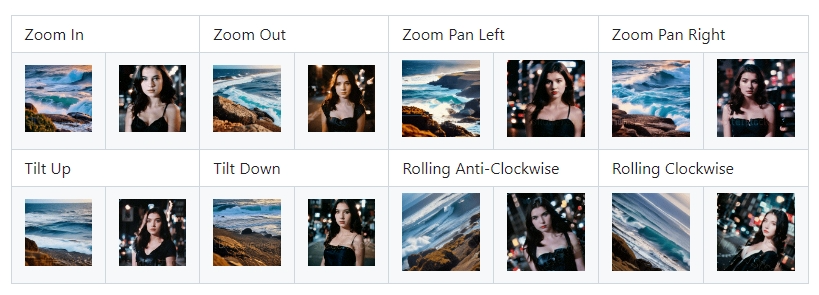

It is worth mentioning that Stable Video Diffusion can also generate multi-view video content from a single input image. For video creators, this feature is a big deal.

For example, multi-angle footage that used to take a lot of effort to shoot can now be generated by AI from a single picture, greatly saving manpower and shooting time.

As Stability AI joins the video generation melee, some netizens could not help remarking: "First RunwayML, then Meta, and now Stable Diffusion. I'm afraid it won't be long before we can use AI to complete an entire movie."

Sure enough, netizens' "blockbusters" are already flooding X (formerly Twitter).

"Burning Tree":

"Van Gogh's Moving Starry Night"

"The future world imagined by AI":

"3D Sunflower"

One video that went viral on X was so smooth and realistic that people wondered whether a game screen recording had slipped into their feed.

This video comes from X user "fofr". According to him, the reference image fed to SVD was generated by Midjourney, and the final result shocked even him: "It looks like real game footage."

Comparing the original reference image with the video, the two are not just similar; they are virtually identical.

"Guizang", one of the first X users to try it, used SVD to generate a set of videos including portraits, flowers, and natural scenery. The results include surreal seascapes as well as portraits and flowers that look like live-action movie shots.

After some testing, "Guizang" concluded that SVD automatically determines what should move and how, and does not suffer from the image breakdown that Runway shows when generating complex content (such as human faces).

Note: this is not a screen recording of "Zelda", but an AI video generated by SVD from game screenshots.

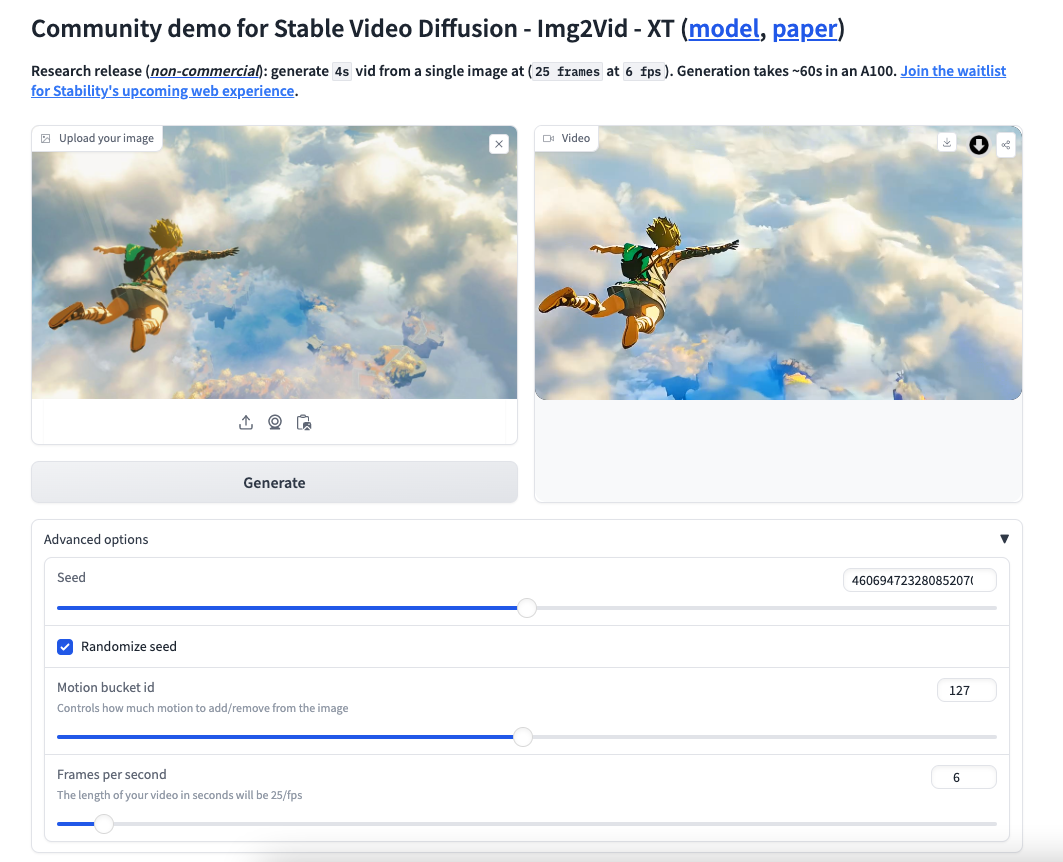

At present, only the base SVD models have been released. Users who want to try SVD but lack the hardware can use its image-to-video function on Hugging Face.

In the interface, drag an image into the panel on the left; the generated video appears on the right. Click Advanced Options to set the seed, control the amount of motion, adjust the frame rate, and so on. When you are done, click "Generate" to create the video.

A battle between AI video generation tools

In the past half year, AI video generation tools have undergone a wave of intensive upgrades.

In July 2023, the "dark horse" Pika Labs began internal testing. Users could generate videos by entering text commands in a chat box. Pika Labs later added "image plus text to video" generation, and its results were praised as comparable to Runway's.

In October, the start-up Moonvalley shifted from AI image/text generation to AI video generation, launching what it calls "the most powerful video generation AI in history", which can generate 16:9 movie-quality high-definition videos with just one prompt.

At the end of October, Genmo released version 0.2 of Replay, which added image-to-video conversion and increased the resolution by 2.7 times.

During the same period, the open source framework AnimateDiff became popular on the Internet and was favored for its high controllability and high quality of generated animated videos.

The AnimateDiff team also ships updates frequently. At first, the framework could only generate 16 frames of animation. After the V2 update in September, it could generate 32 frames at a time, which greatly improved the quality of the generated animation. A LoRA-based camera control function was added later, helping users get more precise results.

In early November, Runway launched a "milestone update", increasing the Gen-2 resolution to 4K and significantly improving video fidelity and consistency.

Last week, social media giant Meta launched two new AI video editing features that will soon be introduced to Instagram or Facebook. Among them, Emu Video can automatically generate four-second videos based on captions, images, text descriptions, etc.

Emu Edit allows users to modify or edit videos through text commands. Ordinary people without professional image editing experience can do post-production with just a few clicks.

Recently, Gen-2's "Motion Brush", which lets you paint over the areas you want to move, was also officially launched.

This back-and-forth competition among companies not only keeps improving product results and user experience, but is also quietly changing how ordinary people think about video creation.

Now, Stability AI's entry has people looking forward to where AI video generation tools go next. Do you think making videos will one day be as easy as typing?