OpenAI DevDay in five minutes: GPT-4 Turbo, the GPT Store and more make their debut

Financial News Agency, November 7 (Editor: Shi Zhengcheng) On Monday local time, OpenAI, the leader of the artificial intelligence industry, held the first developer conference in its history. In an opening keynote lasting nearly 45 minutes, OpenAI CEO Sam Altman presented a series of upcoming product updates to developers and ChatGPT users around the world.

At the start of the event, Altman briefly reviewed the company's progress over the past year, noting that "GPT-4, which the company released in March this year, is still the most capable large AI model in the world." Today, 2 million developers use OpenAI's API (application programming interface) to deliver a wide range of services worldwide, 92% of Fortune 500 companies are building on OpenAI's products, and ChatGPT has reached 100 million weekly active users.

GPT-4 Turbo model debuts

Then came the new product announcements, starting with the GPT-4 Turbo model.

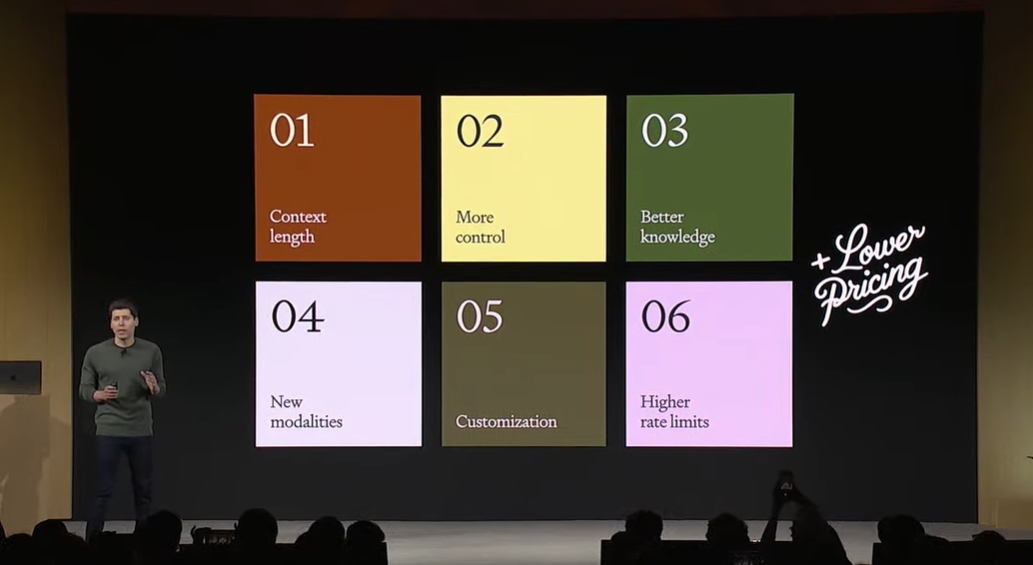

In short, compared with the GPT-4 that investors around the world already know, GPT-4 Turbo improves on it in six main areas.

1. Longer context. The standard GPT-4 model supports up to 8,192 tokens, and an earlier upgrade raised the maximum to 32,000 tokens. GPT-4 Turbo supports up to 128,000 tokens, roughly the amount of text in a standard 300-page printed book. Altman added that the new model is also more accurate when working with long texts.

2. More control for developers. The new model can be instructed to return syntactically valid JSON through a new "JSON mode". Developers can also use the new seed parameter and the system_fingerprint response field to make outputs reproducible, so that "the model returns consistent output for the same request."

3. Fresher knowledge. GPT-4's knowledge of the world extends only to September 2021, while GPT-4 Turbo's extends to April 2023.

4. Multimodal APIs. The text-to-image model DALL·E 3, GPT-4 Turbo with vision (image input), and a new text-to-speech (TTS) model are all available in the API starting today. OpenAI also released Whisper V3, a new speech recognition model, and plans to offer it to developers through the API in the near future.

5. Fine-tuning. Having already opened GPT-3.5 fine-tuning to developers worldwide, OpenAI announced that it will give active developers access to GPT-4 fine-tuning. For vertical AI applications in specialized industries, fine-tuning is a necessary step. For these developers, OpenAI has also launched a Custom Models program to help selected organizations train custom GPT-4 models for specific domains. Altman noted that this will not be cheap at first.

6. Higher rate limits. OpenAI has doubled the tokens-per-minute rate limit for all GPT-4 customers, and developers can apply for further increases.
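To make point 2 concrete, the sketch below builds a Chat Completions request body that turns on JSON mode and sets a seed, then reads the JSON content and the system_fingerprint out of a response. It assumes the public Chat Completions request format and uses `gpt-4-1106-preview`, the identifier of the GPT-4 Turbo preview. The helper names and the sample response are illustrative and do not come from the article. The code only builds and parses dictionaries, so it needs no network access or API key.

```python
import json

# Identifier of the GPT-4 Turbo preview model announced at DevDay.
GPT4_TURBO_PREVIEW = "gpt-4-1106-preview"


def build_chat_request(user_prompt: str, seed: int) -> dict:
    """Build a Chat Completions request body that asks for JSON output.

    Only the payload is constructed here; sending it (a POST to
    https://api.openai.com/v1/chat/completions with an API key) is omitted.
    """
    return {
        "model": GPT4_TURBO_PREVIEW,
        # JSON mode: the model must emit a syntactically valid JSON object.
        # The conversation has to mention JSON explicitly for this mode.
        "response_format": {"type": "json_object"},
        # Same seed and same parameters -> outputs that are usually identical.
        "seed": seed,
        "temperature": 0,
        "messages": [
            {"role": "system", "content": "Reply with a JSON object only."},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_reply(response: dict) -> tuple[dict, str]:
    """Return the decoded JSON content and the system_fingerprint of a response."""
    content = response["choices"][0]["message"]["content"]
    # A changed fingerprint means the backend configuration changed, so
    # the same seed may no longer reproduce the same output.
    return json.loads(content), response.get("system_fingerprint", "")


# Usage with a hand-written (made-up) response body:
sample = {
    "choices": [{"message": {"content": '{"city": "Paris"}'}}],
    "system_fingerprint": "fp_123",
}
data, fingerprint = parse_reply(sample)
```

JSON mode guarantees that the reply parses as JSON, but it does not by itself enforce a particular set of fields, so applications should still validate the decoded object.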

Like Microsoft and Adobe, OpenAI has also introduced a "Copyright Shield". If ChatGPT Enterprise customers or API users face copyright infringement claims, OpenAI will step in to defend them and pay the resulting costs.

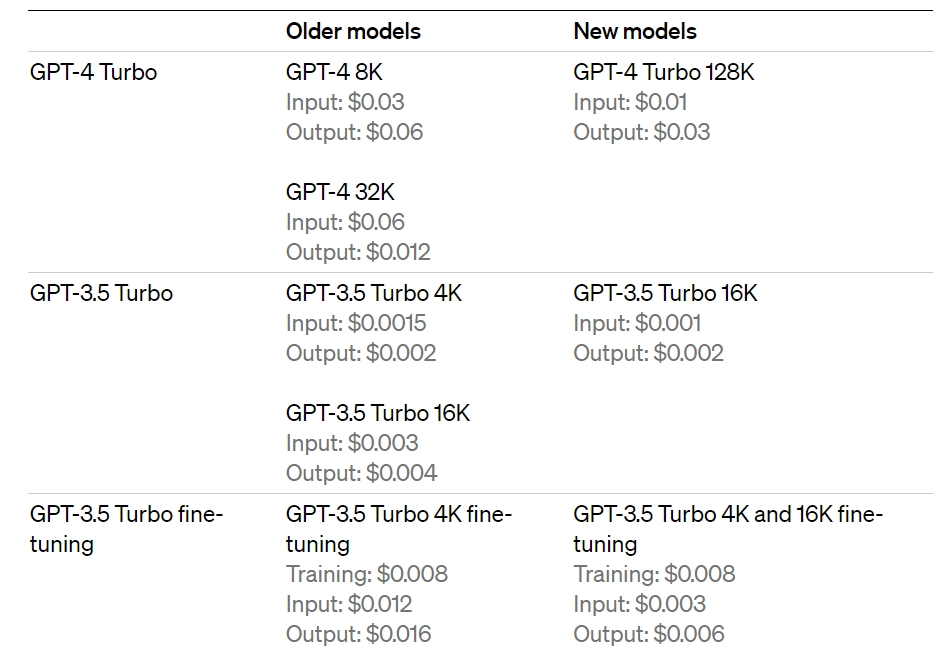

On pricing, a key concern for the market, GPT-4 Turbo is much cheaper than GPT-4 even though it is the industry-leading model: input tokens cost one-third as much and output tokens half as much. In concrete terms, 1,000 input tokens cost 1 cent and 1,000 output tokens cost 3 cents. Prices for the GPT-3.5 Turbo 16K model were cut as well.

(New pricing table, source: OpenAI)
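The arithmetic behind these prices can be checked with a small cost calculator. This is a minimal sketch: the GPT-4 Turbo rates ($0.01 input and $0.03 output per 1,000 tokens) come from the announcement above, while the GPT-4 rates ($0.03 and $0.06) are derived from the stated "one-third" and "half" ratios and match the 8K-context GPT-4 list price. The model keys and function name are illustrative labels, not API identifiers. `Decimal` is used so that prices in cents are not distorted by floating-point rounding.

```python
from decimal import Decimal

# USD per 1,000 tokens as (input, output).
PRICES_PER_1K = {
    "gpt-4": (Decimal("0.03"), Decimal("0.06")),
    "gpt-4-turbo": (Decimal("0.01"), Decimal("0.03")),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost in USD of one request, billed per token at the per-1K rates above."""
    in_price, out_price = PRICES_PER_1K[model]
    return (in_price * input_tokens + out_price * output_tokens) / 1000


# A request with 10,000 input tokens and 1,000 output tokens:
old = request_cost("gpt-4", 10_000, 1_000)
new = request_cost("gpt-4-turbo", 10_000, 1_000)
```

For this example request the cost falls from $0.36 on GPT-4 to $0.13 on GPT-4 Turbo. Because prompts are usually much longer than replies, the one-third input price drives most of the saving.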

During a break between announcements, Microsoft CEO Satya Nadella came on stage, praised OpenAI, and reiterated that "Microsoft loves OpenAI."

ChatGPT gets updates too

Altman said that although this was a developer conference, OpenAI could not resist making some updates to ChatGPT.

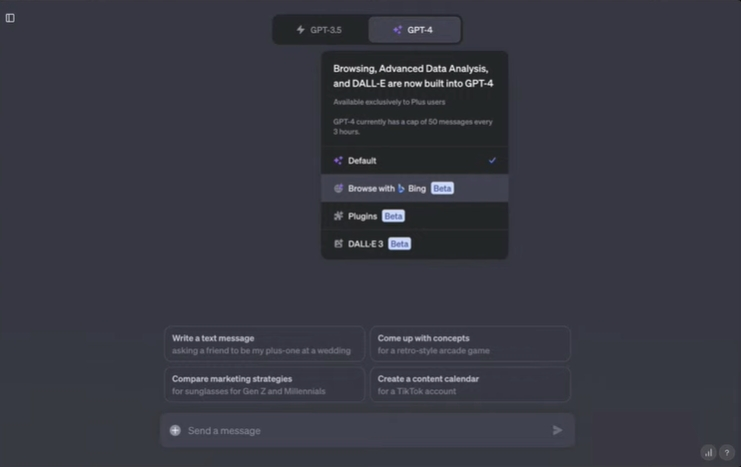

First, starting today, ChatGPT uses the newly released GPT-4 Turbo model. In addition, to spare users from having to choose a mode before every conversation, ChatGPT's product logic has also been updated: it now automatically invokes the appropriate capabilities based on the conversation.

Before update ⬇

After update ⬇

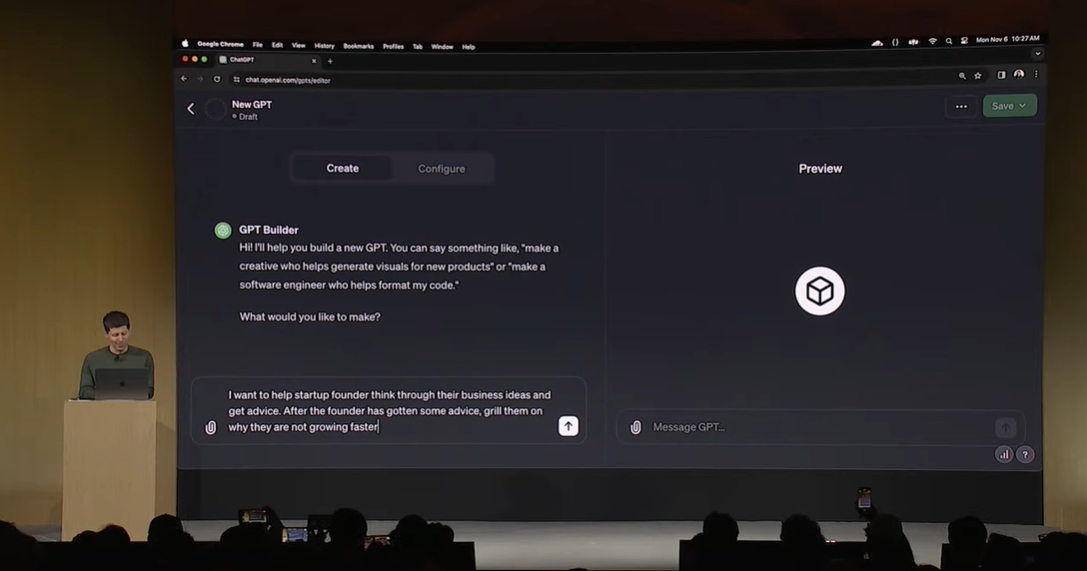

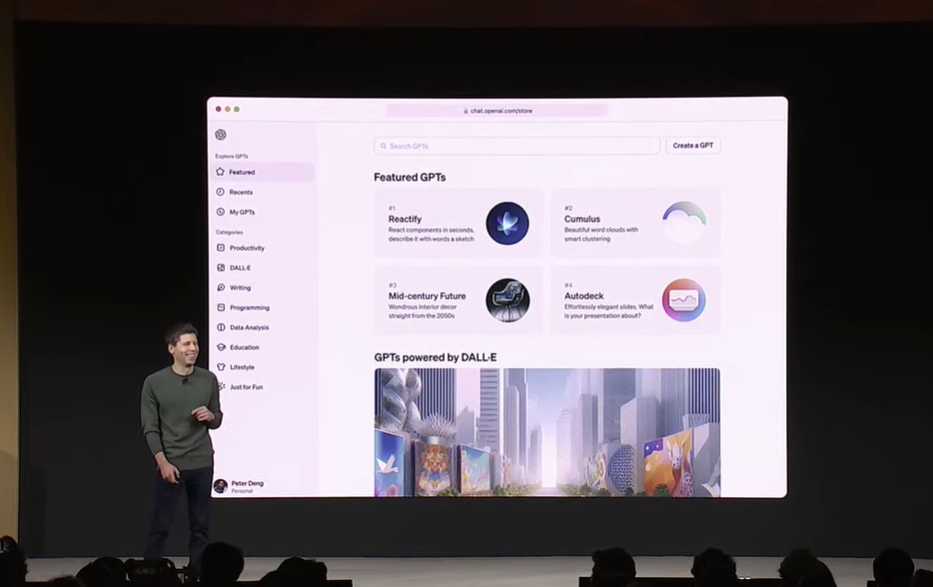

Next came the event's second major product: GPTs. Users will be able to build their own GPTs by giving them custom instructions, extending the model's knowledge, and equipping them with actions, and can publish them for people around the world to use. What's more, the entire process of building a custom GPT happens through natural-language conversation.

Altman then demonstrated building a GPT through chat. His instruction to the GPT Builder was: "I want to help entrepreneurs think through their business ideas and give them advice, and then 'grill' them on why their companies aren't growing fast enough."

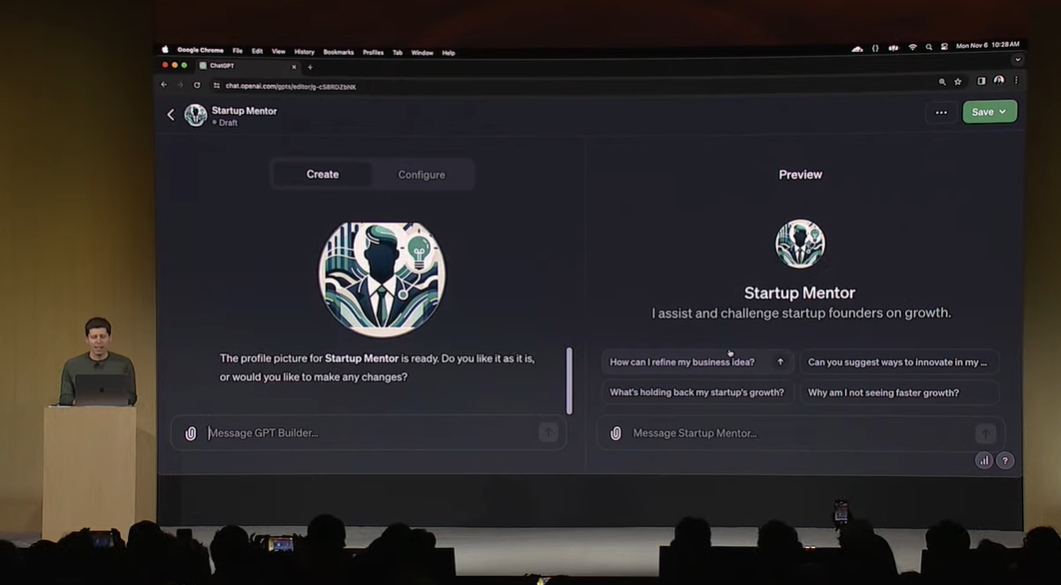

ChatGPT quickly built a startup-advice GPT and even generated a logo for it.

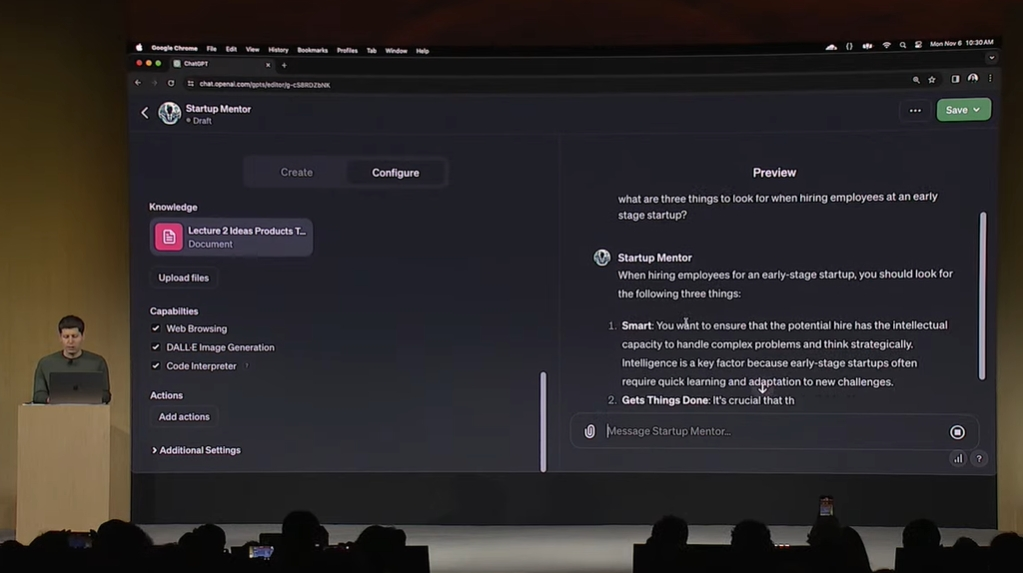

Altman then uploaded a copy of one of his own talks about startups on the configuration page, giving this use case additional knowledge. With that, the first version of the custom GPT was complete. Users can keep such a GPT for their own use or publish it publicly.

Speaking of public release, OpenAI also announced that it will launch a "GPT Store" later this month. To foster the GPT ecosystem, the company will share part of its revenue with the creators of the most popular GPTs.

Assistants API

Finally, there is the new Assistants API for developers. An "assistant" is a purpose-built AI that follows specific instructions, draws on additional knowledge, and can call models and tools to perform tasks. The Assistants API provides capabilities such as Code Interpreter, Retrieval, and function calling, taking over much of the heavy lifting that developers previously had to do themselves.

According to OpenAI, the API supports a wide range of use cases, such as natural-language data analysis apps, coding assistants, AI vacation planners, voice-controlled DJs, and smart visual canvases.
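The typical flow with this API is: create an assistant with its instructions and tools, open a thread, add the user's message, start a run, and poll the run until it finishes. The sketch below covers two pieces of that flow. It assumes the beta (v1) Assistants API as documented at launch, with the tool types `code_interpreter`, `retrieval` and `function` and the run statuses listed in the code. The assistant name, instructions and the `get_session_time` function are hypothetical. The code only builds a request body and the client-side polling decision, and it makes no network calls.

```python
# Run statuses after which the client can stop polling.
TERMINAL_STATUSES = {"completed", "failed", "cancelled", "expired"}


def build_assistant(name: str, instructions: str) -> dict:
    """Request body for POST /v1/assistants with the three launch tool types."""
    return {
        "model": "gpt-4-1106-preview",
        "name": name,
        "instructions": instructions,
        "tools": [
            {"type": "code_interpreter"},  # runs Python in a sandbox
            {"type": "retrieval"},  # searches files attached to the assistant
            {
                "type": "function",  # developer-defined function (hypothetical)
                "function": {
                    "name": "get_session_time",
                    "description": "Look up when a conference session starts.",
                    "parameters": {
                        "type": "object",
                        "properties": {"session": {"type": "string"}},
                        "required": ["session"],
                    },
                },
            },
        ],
    }


def next_step(run_status: str) -> str:
    """Decide what the client does after polling a run's status."""
    if run_status == "requires_action":
        # The model requested a function call: execute it locally, then send
        # the result back through the run's submit_tool_outputs endpoint.
        return "submit_tool_outputs"
    if run_status in TERMINAL_STATUSES:
        return "stop"
    # queued, in_progress or cancelling: wait and poll again.
    return "poll_again"
```

In this design the server keeps the conversation state in the thread, so the client's job shrinks to starting runs, answering function-call requests, and reading back the messages.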

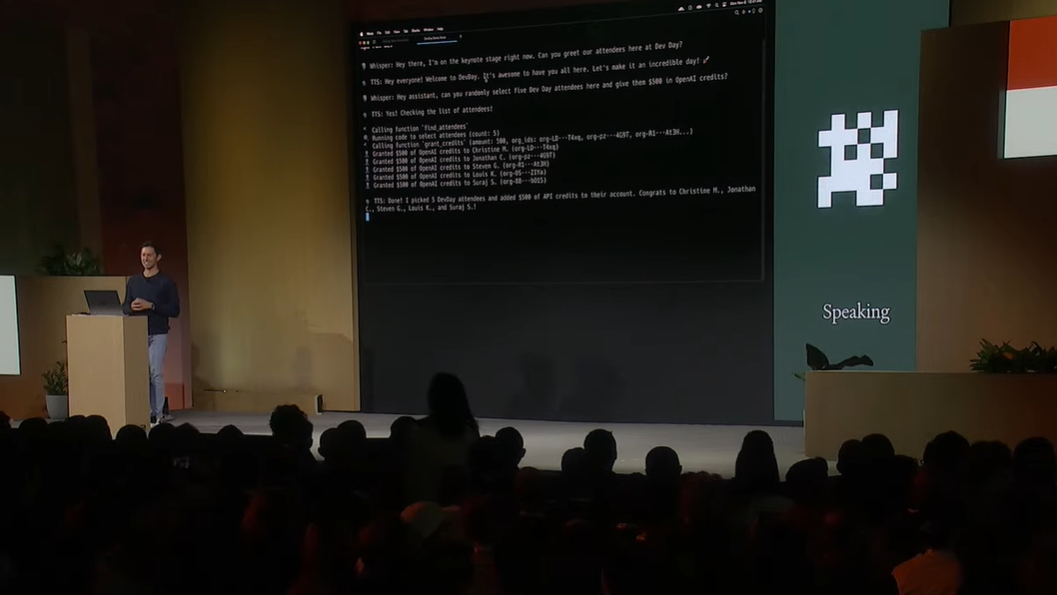

As a demonstration, Romain Huet, OpenAI's head of developer experience, built an assistant that "knows everything about the developer conference" and used Whisper for voice input.

Because the API can also connect to external services, Romain used a voice command on stage to pick five audience members at random and add $500 in credits to each of their OpenAI accounts.

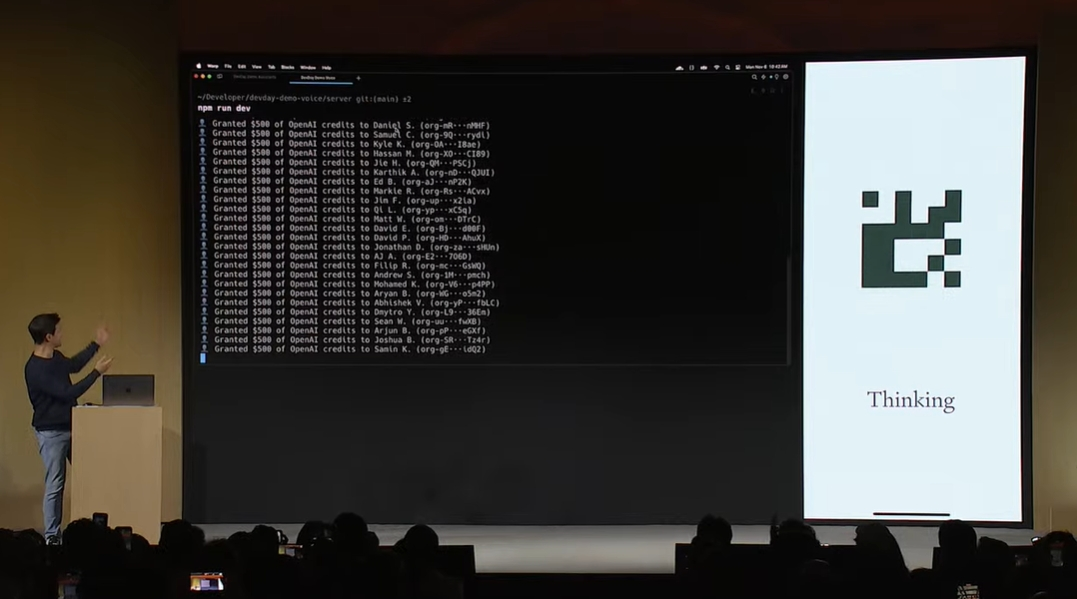

As the final surprise of the event, Romain gave the AI one more instruction and added $500 in credits to the accounts of everyone in attendance.

Special statement: This article was uploaded and published by an author on "NetEase Account", NetEase's self-media platform, and represents only the author's views.