Another epic upgrade for Stable Diffusion: 5x faster image generation

AI painting is evolving incredibly fast! LCM, a new generation of generative model, has recently swept the scene, and it can greatly speed up image generation.

Let’s try it together today!

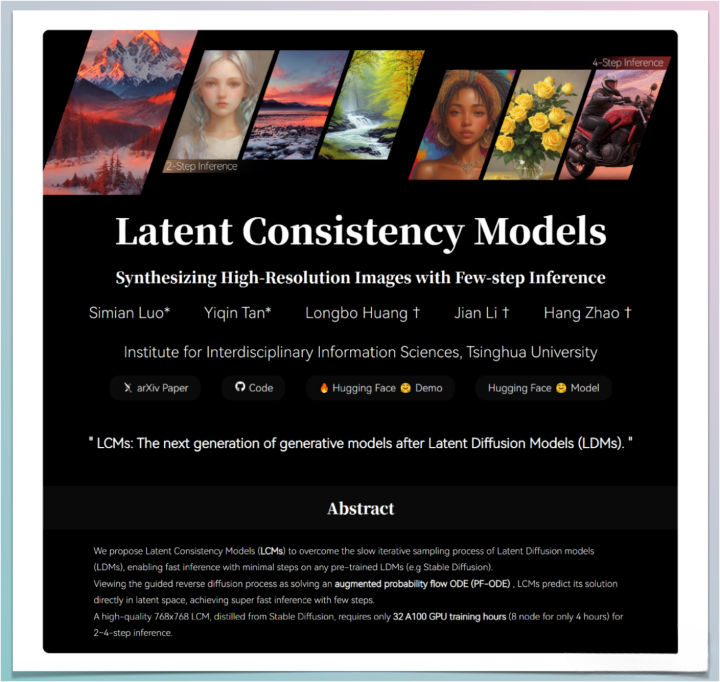

Introduction to LCM

LCM, short for Latent Consistency Models, is a generative model developed at the Institute for Interdisciplinary Information Sciences of Tsinghua University.

Its key feature is that it synthesizes high-resolution images in only a few inference steps, making image generation 2-5 times faster while requiring less computing power.

According to its authors, LCMs are the next generation of generative models after LDMs (Latent Diffusion Models).

If you are already familiar with how Stable Diffusion renders an image, you will know that it starts from an image of pure noise and removes the noise step by step until a clear image emerges. This usually takes 20-50 steps (the sampling steps value we set).

LCM, by contrast, does not iterate step by step; it aims to get the result right "in one step". Building on Consistency Models, LCM works in the latent space, which further compresses the amount of data to be processed and enables ultra-fast image inference and synthesis. It needs only 2-4 steps!
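To make the "one step" idea more concrete, here is a minimal toy sketch of multistep consistency sampling in plain Python. It is not LCM's code: it assumes 1-D "images" drawn from a Gaussian, a hand-picked cosine noise schedule, and the hypothetical names `DATA_MEAN`, `DATA_STD`, `alpha_sigma`, `consistency_fn` and `sample`. For Gaussian data, the consistency function (which maps any noisy point straight back to the clean point on its denoising trajectory) has a closed form; a real LCM instead learns an approximation of it with a network operating on Stable Diffusion's latents.

```python
import math
import random
import statistics

# Hypothetical toy data: 1-D "images" drawn from N(DATA_MEAN, DATA_STD**2).
DATA_MEAN, DATA_STD = 2.0, 0.5


def alpha_sigma(t):
    """Cosine variance-preserving schedule: z_t = alpha_t * x0 + sigma_t * noise."""
    return math.cos(math.pi * t / 2), math.sin(math.pi * t / 2)


def consistency_fn(z, t):
    """Map a noisy sample z at time t straight back to its clean x0.

    For Gaussian data this is exact: the probability-flow ODE trajectory is an
    affine map from the noisy marginal to the data distribution. It also meets
    the boundary condition f(x, 0) = x.
    """
    a, s = alpha_sigma(t)
    noisy_std = math.sqrt((a * DATA_STD) ** 2 + s ** 2)
    return DATA_MEAN + DATA_STD * (z - a * DATA_MEAN) / noisy_std


def sample(timesteps, rng):
    """Multistep consistency sampling: jump to x0, re-noise, jump again."""
    z = rng.gauss(0.0, 1.0)                   # pure noise at timesteps[0] = 1.0
    x0 = consistency_fn(z, timesteps[0])      # one-step estimate
    for t in timesteps[1:]:
        a, s = alpha_sigma(t)
        z = a * x0 + s * rng.gauss(0.0, 1.0)  # add noise back at level t
        x0 = consistency_fn(z, t)             # refine the estimate
    return x0


rng = random.Random(0)
for steps in ([1.0], [1.0, 0.5], [1.0, 0.75, 0.5, 0.25]):
    xs = [sample(steps, rng) for _ in range(20000)]
    print(f"{len(steps)} step(s): mean={statistics.mean(xs):.3f}, std={statistics.stdev(xs):.3f}")
```

Because this toy consistency function is exact, a single step already reproduces the data distribution, and extra steps simply jump to x0, add noise back and jump again. A learned function is only approximate, which is roughly why LCM uses a few steps (2-4): each extra jump lets the model correct its previous estimate.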

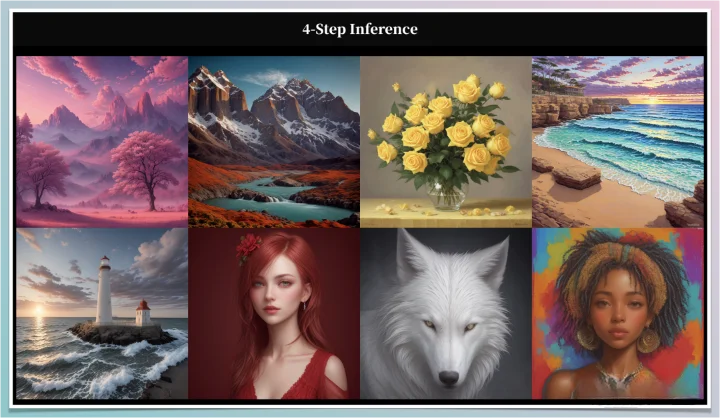

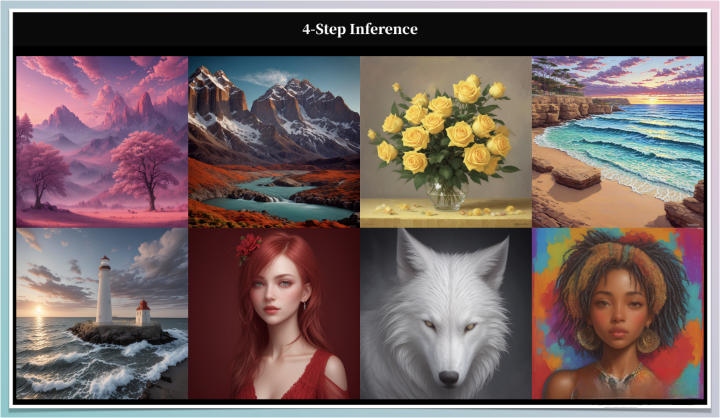

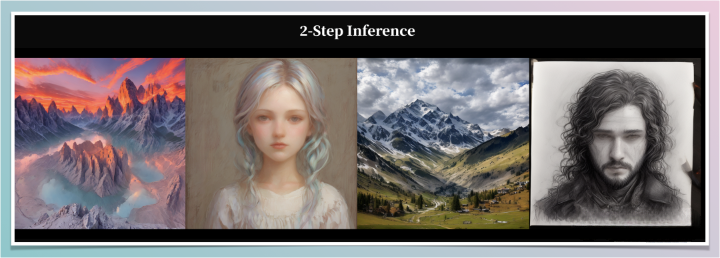

Here are some sample results first:

4-step results

2-step results

1-step results

Try it out

There are currently three ways to try it; pick whichever suits you.

Try it online

Text-to-image:

https://huggingface.co/spaces/SimianLuo/Latent_Consistency_Model

Image-to-image: https://replicate.com/fofr/latent-consistency-model

Use the Dreamshaper-V7 base model

Currently, the official project provides only two LCM models for Stable Diffusion: Dreamshaper-V7 and LCM-SDXL. To use them in the Stable Diffusion WebUI, you also need to install the LCM plug-in.

Plug-in: https://github.com/0xbitches/sd-webui-lcm

Model: https://huggingface.co/SimianLuo/LCM_Dreamshaper_v7/tree/main

LCM-SDXL: https://huggingface.co/latent-consistency/lcm-sdxl

Use LCM-LoRA

Besides the base models above, the authors also provide LCM-LoRA, a LoRA that currently works with any SD1.5 or SDXL base model (use the version that matches your model family).

LCM-LoRA can currently be used in ComfyUI and Fooocus.

SD1.5 LoRA: https://huggingface.co/latent-consistency/lcm-lora-sdv1-5

SDXL LoRA: https://huggingface.co/latent-consistency/lcm-lora-sdxl
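Why can one small LoRA file speed up many different checkpoints? A LoRA does not replace a model's weights; it stores a low-rank update that is added to existing weight matrices, so it fits any checkpoint whose layers have the same shapes (hence separate files for SD1.5 and SDXL). The plain-Python sketch below shows that merge for a single weight matrix. The `alpha / rank` scaling follows the usual LoRA convention, while the helper names `matmul`, `merge_lora`, `rand_matrix` and the layer sizes are hypothetical; it does not reproduce how ComfyUI or Fooocus load the LCM-LoRA files.

```python
import random


def matmul(a, b):
    """Plain-Python product of an (n x k) matrix a and a (k x m) matrix b."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_lora(base, down, up, alpha, rank):
    """Return W' = W + (alpha / rank) * (up @ down).

    base: frozen d_out x d_in weight from the base checkpoint
    up:   d_out x rank matrix (often called B)
    down: rank x d_in matrix (often called A)
    """
    delta = matmul(up, down)
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(base, delta)]


rng = random.Random(0)


def rand_matrix(rows, cols):
    """Random stand-in weights, uniform in [-1, 1]."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


d_out, d_in, r = 64, 64, 4               # hypothetical layer size and LoRA rank
checkpoint_a = rand_matrix(d_out, d_in)  # stands in for one SD1.5-family model
checkpoint_b = rand_matrix(d_out, d_in)  # stands in for another with the same shape
lora_up, lora_down = rand_matrix(d_out, r), rand_matrix(r, d_in)

# The same LoRA patches both checkpoints: only the layer shapes must match.
merged_a = merge_lora(checkpoint_a, lora_down, lora_up, alpha=4.0, rank=r)
merged_b = merge_lora(checkpoint_b, lora_down, lora_up, alpha=4.0, rank=r)

print("full matrix params:", d_out * d_in)   # 4096
print("LoRA params:", r * (d_out + d_in))    # 512
```

For a 64 x 64 layer with rank 4, the update needs 512 numbers instead of 4,096, which is why a LoRA download is far smaller than a full base model.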

With LCM, rendering speed is indeed greatly improved, but image quality is still slightly lower.

For now, it is still well worth trying out. We will share more about LCM as further improvements arrive!