NVIDIA unveils its most powerful AI chip, the H200, overnight: Llama 2 70B inference speeds up 90%, shipping in Q2 2024

NVIDIA has unveiled the H200, the world's most powerful AI chip to date, at the Supercomputing Conference 2023 (SC23).

The new GPU is based on the H100 and offers 1.4x the memory bandwidth and 1.8x the memory capacity, improving its ability to handle generative AI tasks. "NVIDIA's innovations in hardware and software are creating a new breed of AI supercomputing," said Ian Buck, vice president of the company's high-performance computing and hyperscale data center business.

"The NVIDIA H200 Tensor Core GPUs deliver game-changing performance and memory capabilities to enhance generative AI and high-performance computing (HPC) workloads," NVIDIA said in an official blog post. As the first GPU to feature HBM3e, the H200 accelerates generative AI and large language models (LLMs) with larger and faster memory, while advancing scientific computing for HPC workloads.

In addition, Buck demonstrated a server platform that connects four NVIDIA GH200 Grace Hopper superchips via the NVIDIA NVLink interconnect. The quad GH200 configuration provides up to 288 Arm Neoverse cores and 16 petaflops of AI performance in a single compute node, along with up to 2.3 TB of high-speed memory.
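As a rough sanity check on the node figures above, dividing each total across the four superchips gives the share per superchip. This is only a sketch: it assumes the four chips contribute equally, it uses the "up to" totals quoted above, and the variable and function names are illustrative.

```python
# Node-level figures quoted in the article for the quad GH200 platform.
SUPERCHIPS_PER_NODE = 4
NODE_ARM_CORES = 288        # Arm Neoverse cores per node
NODE_AI_PETAFLOPS = 16      # AI performance per node
NODE_MEMORY_TB = 2.3        # "up to" high-speed memory per node


def per_superchip(total, count=SUPERCHIPS_PER_NODE):
    """Split a node-level total evenly across the superchips (an assumption)."""
    return total / count


cores_each = per_superchip(NODE_ARM_CORES)
pflops_each = per_superchip(NODE_AI_PETAFLOPS)
memory_gb_each = per_superchip(NODE_MEMORY_TB) * 1000  # decimal TB to GB

print(f"Cores per superchip:     {cores_each:.0f}")
print(f"Petaflops per superchip: {pflops_each:.0f}")
print(f"Memory per superchip:    about {memory_gb_each:.0f} GB")
```

Under that even-split assumption, each superchip accounts for 72 cores, 4 petaflops of AI performance, and roughly 575 GB of memory.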

The NVIDIA H200 is based on the NVIDIA Hopper architecture and is compatible with the H100, meaning that AI companies already training on the earlier model can adopt the new version without changing their server systems or software.

The H200 is the first GPU to offer HBM3e, with 141 GB of memory at 4.8 TB/s. That is nearly double the 80 GB capacity of NVIDIA's H100 Tensor Core GPU, and 2.4x the bandwidth of the older A100. The H200's larger and faster memory accelerates generative AI and LLMs while advancing scientific computing for HPC workloads with greater energy efficiency and lower cost.
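The memory ratios quoted in this article can be checked with simple division. The sketch below takes the H200 and H100 capacity figures from the text; the H100 and A100 bandwidth values are assumptions drawn from NVIDIA's published SXM specifications rather than from this article. On those assumptions, the 1.8x capacity and 1.4x bandwidth figures are relative to the H100, while the 2.4x bandwidth figure corresponds to a comparison with the A100.

```python
# Figures stated in the article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8
H100_MEMORY_GB = 80

# Assumptions, not from the article: approximate SXM spec-sheet bandwidths.
H100_BANDWIDTH_TBS = 3.35
A100_BANDWIDTH_TBS = 2.0

capacity_vs_h100 = H200_MEMORY_GB / H100_MEMORY_GB
bandwidth_vs_h100 = H200_BANDWIDTH_TBS / H100_BANDWIDTH_TBS
bandwidth_vs_a100 = H200_BANDWIDTH_TBS / A100_BANDWIDTH_TBS

print(f"Capacity vs H100:  {capacity_vs_h100:.2f}x")
print(f"Bandwidth vs H100: {bandwidth_vs_h100:.2f}x")
print(f"Bandwidth vs A100: {bandwidth_vs_a100:.2f}x")
```

The capacity ratio comes out at about 1.76x, which rounds to the quoted 1.8x and is why "nearly double" is a fair description.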

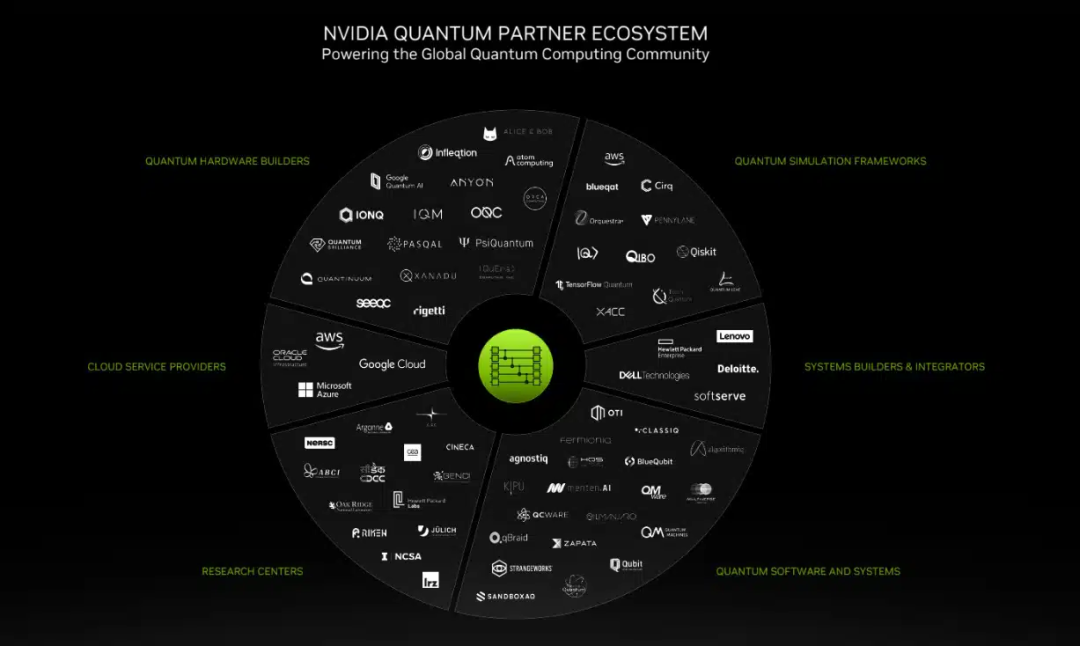

Starting in 2024, Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be the first cloud service providers to deploy H200-based instances, NVIDIA said.

SC23 also saw the release of the latest edition of the TOP500 list of supercomputers. The Frontier system remains at the top of the list and is still the only exascale machine on it, but five new or upgraded systems have reshaped the top ten.

With this level of performance, NVIDIA is set to supply more computing power to supercomputing centers around the world. At SC23 alone, several supercomputing centers announced that they are integrating GH200 systems into their supercomputers.

For example, the Jülich Supercomputing Center in Germany will use the GH200 superchip in JUPITER, which is set to become Europe's first exascale supercomputer.